[Photograph by lynnalynn0 under Creative Commons]

Sujit Nair was perplexed. Last year, the global consulting firm he worked for had invested a considerable sum in measuring employee engagement. The survey had helped the firm identify four areas with potential for improvement. It told them these areas mattered to their people, but it didn’t tell them what was driving the low scores or what they could focus on to improve them.

Sujit was asked to head a cross-functional team to understand one of these areas and design interventions to correct the situation. The team brainstormed hypotheses, then ran surveys and group discussions to confirm or reject each one. Recommendations based on the results were presented to senior leadership; some were accepted and interventions were put in place. The survey was repeated this year and the scores were even lower. Sujit had no idea what had gone wrong.

Over the last few years, my work has convinced me that such problems occur because we are trying to solve human issues with approaches designed for problems involving machines or markets. The flawed assumption is that organizational behaviour, like those systems, has a certain level of predictability and order.

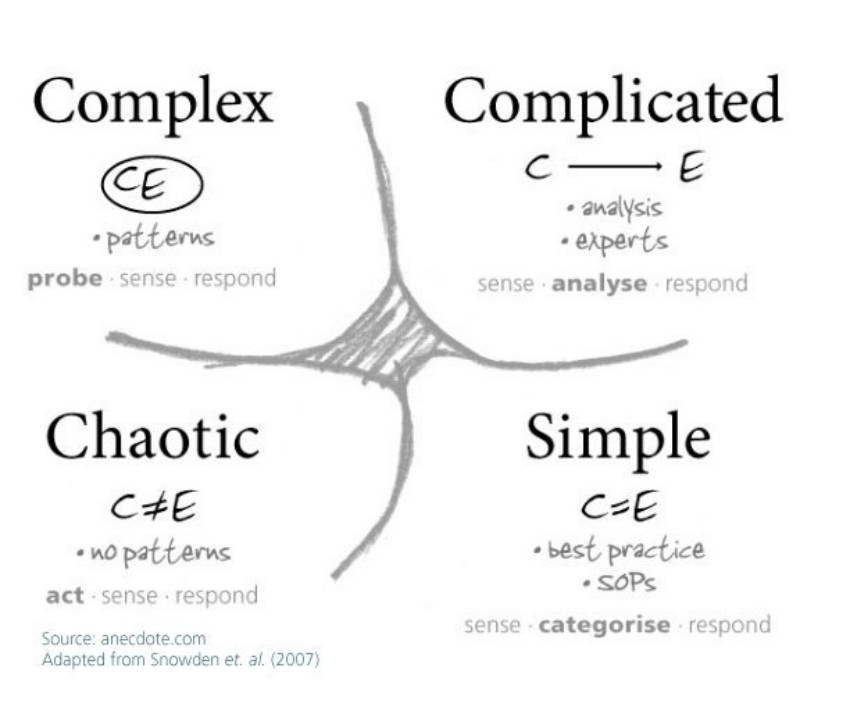

The Cynefin (pronounced cun-ev-in) framework sheds the most light on this problem. Developed by Dave Snowden in 1999, it draws on concepts from knowledge management and organizational strategy. Snowden and his colleague Mary Boone described the framework in the November 2007 issue of the Harvard Business Review.

The framework categorizes organizational activity into four domains: simple, complicated, complex and chaotic.

[The Cynefin framework]

The simple domain is where the cause and effect relationship is well known and linear. If you do X you will always get Y. Heavily process-oriented situations, such as loan payment processing, are in this domain. If something goes awry, an employee can usually identify the problem (when, say, a borrower pays less than is required), categorize it (review the loan documents to see how partial payments must be processed), and respond appropriately (not accept the payment or apply the funds according to the terms of the note).

Standard operating procedures and best practices work well in this domain. Our approach to problem solving here is sense, categorize and respond. Most people are very good at problem solving in this domain.

The complicated domain is another domain most of us are good at. Here cause and effect are still related, but you have to put some effort into working out the possibilities. A given effect can have several possible causes, so this domain is about analysis and expertise: rational, linear, data-based logic. The approach here is sense, analyse and respond.

Imagine that an aircraft is stuck on the runway. The cause could be mechanical, electrical or fuel-related, so we need an expert to diagnose the problem using data. Say the expert finds that it is an electrical problem: a blown fuse. This is now a problem in the simple domain. We follow the prescribed procedure for replacing the fuse and the plane can move.

The area that really interests us is the complex domain. It includes things like culture, innovation, leadership and trust: all messy people issues. In government, examples include the economy, the environment and homelessness. Here cause and effect are so intertwined that things only make sense in hindsight. There is no single correct answer. The way to make progress is to sense the patterns.

The mistake many organizations make is to treat these messy people issues as if they were simple or complicated, using approaches that work for machines and markets. Once you recognize them as complex, you can start using different tools and different ways of understanding the problem. This is where stories become important, because they help you see patterns.

Sujit’s approach is a classic example of this mistake. His team was trying to address people issues with methods that assume predictability and order.

There are several other problems with this approach. The first is hypothesis building. It seems natural: after all, how can an investigation begin without hypotheses? However, the hypotheses a cross-functional team comes up with are limited by the depth of its own understanding of the problem.

Say the organization has a low score in ‘fairness in appraisal’.

Now imagine that I am the cross-functional team leader and I’ve been given the mandate to find out why the employees gave a low score and what we can do next year to remedy that.

I sit with the team and we come up with a few hypotheses: “Probably the employees feel that the bell curve process is unfair,” or “It is because of recency: we appraise January to December performance in April, so something the employee does in February ends up influencing it.” Clearly, these hypotheses can only be as good as the team’s understanding of the issue.

The second problem is that most survey methodologies generate no additional clarity. They only confirm or reject the hypotheses. That happens because of confirmation bias. If I have hypotheses A and B and the respondents say B and C, guess what I hear? B. C was never part of my hypotheses.

The third problem is that these surveys do nothing but collect opinions about the hypotheses, and opinions can easily be argued with. Say I go back to senior management and report that, after interacting with 500 employees, we believe the main reason for dissatisfaction in this area is our use of the bell curve: 80% of employees believe it is unfair, so we should find a new way of doing appraisals. The CEO looks at me and says, “I don’t think the bell curve is the problem. I think the communication about the bell curve was the problem. This year, we should communicate better.”

What just happened? One leader’s opinion superseded the opinions of 500 employees. Opinions can always be argued with, and a senior person’s opinion usually prevails.

We have used stories very successfully to make sense of such messy people issues.

If we were asked to identify what was driving the low scores and design interventions, this is how we would go about it. We would first collect stories about appraisals and fairness in appraisal. To do that, we would run what we call anecdote circles: group discussions designed to elicit stories. Of course, you can’t just say “Tell me a story.” Instead, we ask questions that take people back to a specific moment when they experienced the appraisal process.

After collecting these stories, we run a workshop to make sense of them and design interventions. We put the stories up on a wall and call in the leaders. For the first hour or so, we give them a pad and a pen and say, “Just read the stories in silence.” What we are asking them to do is see whether they can sense any patterns emerging in the stories we’ve collected. This is when I can almost hear the proverbial penny drop, because when you are reading a story you can’t argue with it. You can only take it in.

Let’s go back to the issue of fairness in appraisals. Say we found a collection of stories along these lines: “I don’t think appraisals in this company are fair. Last year when my appraisal was being done, my boss answered a few SMSs. Once his laptop pinged and he said ‘Oh this is important, give me a moment, I need to answer it.’ And towards the end of the appraisal he waved at someone and said ‘Hey! Could you get coffee, I’ll just come.’ I think it’s just a tick-mark exercise.”

If you see a pattern of stories like this, you are in a much better position to judge which interventions to design. You would probably decide to coach appraisers in active or empathetic listening. You might set aside a zone for appraisals where people can’t take their smart devices. And because leaders can’t argue with the stories they read, we can be sure we are attacking the right problem rather than deciding to communicate the brilliance of the bell curve.

In the complex domain you cannot categorize or analyse the problem. Here you need to probe, sense and respond.

The last domain, the chaotic domain, is where none of the other ways of making sense of or analysing a problem works. In a chaotic situation you simply act. Take any action that moves the problem into one of the other domains, and then look at how you can analyse it. The approach here is act, sense and respond.

Think of 9/11 in New York. One plane has hit the first tower and a second plane is coming in. You are not going to sit back and analyse, categorize or try to make sense of it. You just act: call the fire brigade, call the ambulances, order all planes grounded, and so on. Once you have taken action and pushed each of these problems into one of the other domains, you can see what analysis is possible. Luckily, organizations rarely face such problems.

We did work with Sujit and his team on these messy people issues and were able to identify the real reasons behind the low engagement scores. The interventions we designed addressed those issues, and the results were in line with our ambitions.

This approach has another significant benefit. Because the method involves listening to a large number of employees, change begins as soon as story collection starts, and implementation becomes a co-created process.